It’s the Keyboard’s Fault!*

Or: Bring Back the APL Keyboard. No, Wait—Don’t. But Maybe, Kind Of.

This series is a deep dive into operators and their overloading—how they work, how they don’t, why so much confusion surrounds them, and how we might disentangle that. We have a lot of ground to cover, including some history, some misconceptions, some mathematical grounding, several entangled ideas, and the quiet but powerful ways our tools silently shape how we think.

And yes, we are confused about them. Operators originated in the precise languages of algebras, but their scope has grown as the result of historical accidents, not coherent design—and not always in a good way.

Mathematical notation is the epitome of front-loading problem-solving. Once an algebra is rigorously defined, its notation becomes a tool we can use with confidence elsewhere. You don’t need a course in real analysis to use differentiation. In effect, the notation acts as an interface to some underlying complexity, splitting learning into interface and implementation—exactly as we do with libraries and modules.

So we can’t reject operators just because they’re sometimes misused. That would be like rejecting all libraries because some are badly designed—it confuses implementation failures with conceptual flaws.

However, what does it now mean for a language to support operators? Or to implement them? Without clear definitions—which we’ll try to address toward the end—the answers grow murky, and sometimes dangerous.

Many good languages avoid extended operators, trading away structured semantics for the perceived safety that minimal syntax offers against social misuse.

The keyboard isn’t really to blame (we’ll get to encodings and feature creep), but it is definitely a villain. It triggers the others and so offers a convenient narrative entry point into a broader story of symbolic poverty, structural shortcuts, and design habits: a story that led to today’s murky skepticism and that affects every programmer, whether they ignore it or not.

In part two, we’ll cover some implementation details and misconceptions to show how “obvious implementations” really aren’t. We’ll also choose some boundaries to define a simple language front for some algebras.

In part three, we’ll dive headfirst into the mire of types, overloading, and evaluation control, before proposing some structures and strictures for a more principled use of operators.

In this first article, we’ll explore how we got here, and how we might disentangle the mess. It’s a story of adaptation and compromise: how the constraints of input devices shaped design choices—many of which became entrenched idioms—and some bad habits. We’ll look at a few languages and argue that symbolic poverty still distorts how we think about computation—distortions that pollute our definitions and quietly seep into our implementations.

So let’s get started with that history and context.

Keyboard! You parsimonious tyrant of communication!

You probably learned simple arithmetic before you learned to type. You used × for multiplication and ÷ for division—some of the first formal symbols we encounter in school. So do you know where they are on your keyboard?

These are perfectly serviceable symbols. So why do almost all programming languages use * and / instead?

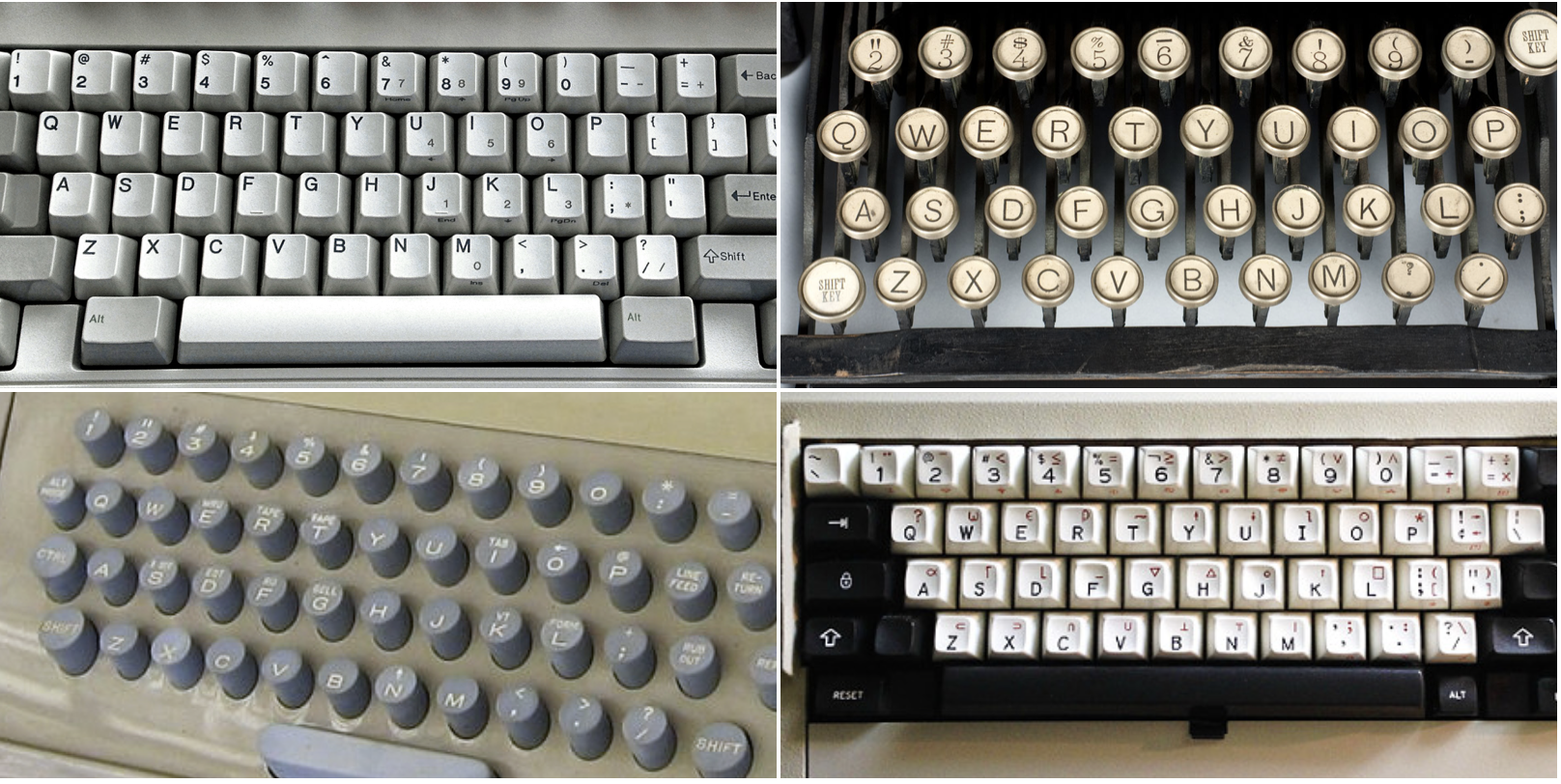

The answer starts with punch cards and early FORTRAN (1957). Keypunch machines like the IBM 029 (1964) or terminals like the Teletype Model 33 (1963) offered limited character sets, prioritized English punctuation, and didn’t care about mathematical symbols or other languages. Keyboards then, as now, were built for publishing and communication, not programming. There’s a clear lineage from the Remington typewriter (1878) to the Model 33 to modern layouts starting with the IBM Model M (1985). And none of them includes the × or ÷ symbols we learn in our earliest schooling.

There are plenty of good "history of" sources on keyboards and early computing. What we need to trace is the interplay between symbol scarcity, keyboard hardware, and the standardized communication encodings that shaped early programming languages like FORTRAN (1957) and later C (1972), and established conventions we follow today without a second thought.

This symbol scarcity was well recognized at the time. ALGOL (1958) did have an infix syntax, but languages like COBOL (1959) and LISP (1958) sidestepped the problem by simply avoiding symbol glyphs. COBOL used an English-like syntax—for example, ADD a, b TO c—an approach still used by SQL (1973–2023) today. Rather than using the symbols of relational algebra, it encodes them in words.

At the opposite extreme was APL (~1962), which required its own keyboard layout with two additional layers of symbols and an extra ALT or APL key. This was needed because APL is a language that does everything with operators, and each operator has its own symbol glyph.

C’s approach was to use ASCII art.

Equality became ==, inequality became !=, and the arrow for member structure access became ->. It also introduced more abstract combinations like += and <<=, which were no longer simple substitutions for missing glyphs but entirely new symbolic constructs.

These are “multi-character punctuators” handled in syntax. They are syntactic sugar, not digraphs or trigraphs. Like all the definitions here, lines are fuzzy, but in principle, digraphs and trigraphs are character combinations in a reduced or non-English character set that substitute early and directly for a symbol in the ASCII character set. E.g., C's trigraphs ??< and ??> are replaced by the braces { and } before tokenization even starts, and its digraphs <% and %> are simply alternative spellings of those same brace tokens. Contrast this with >=, which is a distinct token in its own right, formed during the lexing (or equivalent) part of parsing, when the parser can use an internal encoding and isn’t bound to a human character set.

This multi-character approach was a brilliant and creative response to symbolic scarcity. While C wasn’t the first to do this, it standardized the practice—and in doing so, subtly redefined what an operator could be. We’ll return to these ideas and distinctions later.

Nonetheless, it is still a kludge. A kludge we remain enamored of to this day. Typst (2023), a modern competitor to LaTeX, includes a rich set of these symbolic ASCII forms—like ~~> or <==>—which map cleanly to their Unicode equivalents: ⟿ and ⟺. It showcases the lasting appeal of syntactic stand-ins, even with the richer symbol sets now available. The input bottleneck when using a keyboard (damn you, sir!) remains real. This isn’t just legacy inertia or punctuation cosplay; it’s a behavioral adaptation to the limits of the tools at hand.

Less brilliantly, C also reused symbols for different meanings. Particularly, parentheses () and the * symbol change meaning based on context. This symbol- or glyph-overloading wasn’t a problem in the first version, but soon became a regrettable choice. As the language became more capable, the separation between syntactic role and semantic role became blurred—a failure of what we’d now call the separation of concerns principle—and tangled the various stages of parsing.

Hindsight should cringe at the idea that these two symbols could each have three distinct context-disambiguated meanings within a single expression, yet x = tolower(*( (char*)p + (2*i) )); is perfectly understandable to anyone who knows C or any of its derivatives. And we don’t cringe. In fact, we’re so used to this kind of thing that it doesn’t occur to most of us that getting a parser to do the same disambiguation reliably is damn hard. Really damn hard.

The keyboard can't take all the blame, though. After all, modern OSes allow almost complete reprogramming of a keyboard—essential for international support—yet we don't have a standardized 'technical symbols' layer. You can buy a 'gaming keyboard' at your closest retail tech store, complete with extra keys, markings, and genuinely nice RGB affectations; but the suggestion of resurrecting MIT's Space-Cadet keyboard—with its three types of shift key and four types of meta key—brings genuine laughter, which, to be honest, it probably should.

However, this is a cultural tell: a discomfort with suggested complexity. Humans want expressive power, but only if it is familiar!

The Encoding Collaborator

We also have to look at the significant role that character encodings, initially derived directly from keyboards, played as gatekeepers of the acceptable universe of symbols in a file format.

The broader history of encodings is large and worth telling—but for our purposes, we’ll stay focused and cherry-pick the lineage from the Model 33 through the ASCII encoding.

Keyboards were meant to facilitate human messages and temporarily translate letters into some form of mechanical or electrical format for processing. These formats were, again, constrained by those processing devices. Some punch cards, for example, used a binary encoding, but one where there were no more than three holes for any symbol, thus ensuring the paper didn't fall apart before the processing could be completed.

Bits were also expensive, which limited early encodings to five bits and required tricks like sending 'shift symbols' to switch character sets during transmission.

Which brings us to the influential Teletype Model 33 and 7-bit ASCII encoding.

This encoding has four blocks of 32 symbols. Essentially, the first block is transmission control codes, the second is numbers and punctuation, and the last two are upper- and lowercase letters with a few extra symbols. This mapped neatly to the fixed logic, such as it was, in the keyboard. The control key zeroed the top two of the seven bits to select the control layer, while shift was a little more complex, controlling the sixth bit for letters and the fifth bit for numbers and symbols. This 'bit pairing' was strengthened by moving a few symbols around to make all keys follow this pattern, reducing the logic and therefore cost, and made it into several standards like ECMA-23 (1975).

8-bit encodings became standard, and in 1987 ISO standardized Extended ASCII in ISO 8859-1, using exactly the same four-block bit-pairing pattern. It contained a second control block, a second symbol block, and upper- and lowercase blocks for various European languages—none of which was really of use to language designers.

However, in principle, all you needed to add was a single new 'meta' or 'alt' key to the keyboard that controlled the 8th bit and—with no other changes to the logic—you could mass-produce a fancy 'international' keyboard.

| | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | A | B | C | D | E | F |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0x00 | NUL | SOH | STX | ETX | EOT | ENQ | ACK | BEL | BS | HT | LF | VT | FF | CR | SO | SI |
| 0x10 | DLE | DC1 | DC2 | DC3 | DC4 | NAK | SYN | ETB | CAN | EM | SUB | ESC | FS | GS | RS | US |
| 0x20 | SP | ! | " | # | $ | % | & | ' | ( | ) | * | + | , | - | . | / |
| 0x30 | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | : | ; | < | = | > | ? |
| 0x40 | @ | A | B | C | D | E | F | G | H | I | J | K | L | M | N | O |
| 0x50 | P | Q | R | S | T | U | V | W | X | Y | Z | [ | \ | ] | ^ | _ |
| 0x60 | ` | a | b | c | d | e | f | g | h | i | j | k | l | m | n | o |
| 0x70 | p | q | r | s | t | u | v | w | x | y | z | { | \| | } | ~ | DEL |
| 0x80 | XXX | XXX | BPH | NBH | IND | NEL | SSA | ESA | HTS | HTJ | VTS | PLD | PLU | RI | SS2 | SS3 |
| 0x90 | DCS | PU1 | PU2 | STS | CCH | MW | SPA | EPA | SOS | XXX | SCI | CSI | ST | OSC | PM | APC |
| 0xA0 | NBSP | ¡ | ¢ | £ | ¤ | ¥ | ¦ | § | ¨ | © | ª | « | ¬ | SHY | ® | ¯ |
| 0xB0 | ° | ± | ² | ³ | ´ | µ | ¶ | · | ¸ | ¹ | º | » | ¼ | ½ | ¾ | ¿ |
| 0xC0 | À | Á | Â | Ã | Ä | Å | Æ | Ç | È | É | Ê | Ë | Ì | Í | Î | Ï |
| 0xD0 | Ð | Ñ | Ò | Ó | Ô | Õ | Ö | × | Ø | Ù | Ú | Û | Ü | Ý | Þ | ß |
| 0xE0 | à | á | â | ã | ä | å | æ | ç | è | é | ê | ë | ì | í | î | ï |
| 0xF0 | ð | ñ | ò | ó | ô | õ | ö | ÷ | ø | ù | ú | û | ü | ý | þ | ÿ |

Overall, 8859-1 was still light on technical symbols. The × and ÷ did make it in, though, just edging out the Œ and œ glyphs.

It also included something new: diacritical marks that could be used in two-letter combinations to produce accented letters. In an ironic-for-the-theme-of-this-article twist, these marks act as unary prefix operators: they modify the following character to return a new composite. However, this also breaks the fixed-size encoding view of characters.

Even when Microsoft co-opted the second control block in its CP-1252 extended ASCII standard, things on the technical symbols front didn't improve. Instead, they added eight more desperately needed quotation marks, two new variants of hyphen, and found room for the Œ and œ glyphs, while continuing to respect the bit-pairing pattern for related symbols.

Unicode adopted 8859-1 wholesale as the 'Latin-1 Supplement' block. Unicode was concerned with the much larger glyph encoding and presentation problem, which it solves quite well, and didn't pay much attention to the implications of what many considered outdated fixed 8-bit file encodings.

Not that Unicode doesn't have issues, and there's plenty written about them elsewhere. Relevant here is that it zealously ignores generation concerns, which results in it often conflating the one-dimensional nature of strings with the two-dimensional concerns of typesetting. This, in turn, makes Unicode support hard, with enough implementation friction that even in 2025 truly complete Unicode support is still rare, especially in code editors. Check out the Unicode block starting at U+1D400, Mathematical Alphanumeric Symbols, for an amazing kludge.

This blocking and bit pairing had some important consequences. It solidified the idea of a keyboard layer, which would become essential for multilingual support. However, it wasn't really followed through on in a coherent way. Standards made keyboards interchangeable commodities up to a point, with every manufacturer then trying to distinguish themselves by adding features of questionable utility. While this led to a vibrant keyboard modding community, it also led to application developers only supporting the lowest commonly available functions, little innovation, and no appetite for meaningfully progressing the standards.

It also led to a more subtle and persistent problem: the idea that meaningful relationships between glyphs necessarily meant there were meaningful relationships between the integers encoding the glyphs, rather than those being convenient or clever happenstance. Mistaking an encoding for the thing it represents quickly leads to problems, some of which we'll touch on again in Part 3. For example, it looks like manipulating case is easy—after all, in ASCII you just flip the sixth bit! And cue last week's BCacheFS drama about Unicode case folding.

So this is the vicious infrastructure cycle we haven’t really escaped:

- Keyboards shape encodings.
- Encodings shape file formats.
- Formats shape editors.
- Editors shape input habits and expectations.
- Expectations shape keyboards.

Despite Unicode and fully programmable keyboards with IME layers and editors with custom symbol palettes, the only universal baseline is still 7-bit ASCII. Which you can still edit on a Teletype Model 33 (37 for lowercase support). Assuming you’ve got a current loop to RS-232 to USB adapter and time, of course.

From an input bandwidth perspective, this is the symbol desert we have to respect. Even if we program a beautiful math-symbol oasis, without a standard people actually use, we still live in this desert.

Feature Creep

While I've focused on symbolic scarcity, it wasn't the only thing that caused feature creep in the definition and use of operators.

Types were not well understood. For example, the Curry–Howard correspondence was taking shape in work from the late 1950s, but Howard's formulation of it wasn't published until 1980. We'll come back to the types vs. operator view in Part 3.

Implementation details and kludges also set precedents and blurred the semantic definition of operatorhood.

For example, C's functions had strictly typed fixed signatures, but its operators were type-aware—what we'd now call overloaded. This is an oversimplification, but the complexity was manageable: type promotion rules meant the + operator only needed to dispatch across ~16 combinations, all resolved statically by the compiler.

When C needed something to return the size of a type, it couldn't use a function, because type information wouldn't survive a function call boundary. So sizeof(X) looks like a function, quacks like a function... but it's an operator.

Similar shenanigans are needed for casting, oft described as C's "identifier operator ambiguity."

We also have the problem that programs have many layers to them, which are also not well delineated or defined. For example: the user experience layer, the algorithmic or computational layer, the implementation layer, the compilation and optimization layer, the execution layer, etc. As the sizeof example shows, something might look like an operator in one layer, and something quite different in another.

So is an operator something syntactic in the human-readable code, or in the concrete syntax tree (CST) that the parser builds to exactly represent that code? Or is it the node in the pre-optimized abstract syntax tree (AST) built to represent the computation the code represents? Or the post-optimization AST that represents how the computation will be done?

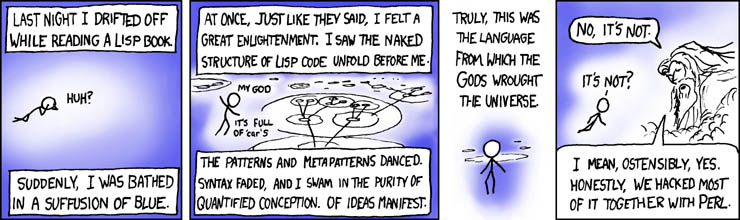

A deeper example of this problem would be Lisp, which sidesteps the problem of operators in infix notation by simply not supporting them.

In the simplest Lisps, (+ 3 4) is synonymous with (add 3 4)—+ is just another character in a name. When Lisp needs to control evaluation order—for instance, in if or a short-circuiting or—it introduces special forms rather than relying on operator semantics, which just means it treats the if function differently. + can still be considered an operator, though, because it looks like one in the AST.

An irony, though, is that with only mild oversimplification, Lisp’s syntax is entirely operator-based—built using just two operators: the parenthesis matchfix operator (...) and the prefix adjacency operator (whitespace or token sequencing). The former means “append contents to the left of the current AST node,” while the latter means “append the next component to the right.”

While this might sound like rhetorical fun—immortalized in the classic XKCD panel below—it shows an important point.

Adjacency can and often should be viewed as an operator. In standard mathematical notation, it is used all the time, usually to mean multiply-with-a-high-precedence. In OCaml, the function call myfun a b desugars into a curried form ((myfun a) b). We could even define a custom operator like let (%%) a b = a b, allowing us to write myfun %% a %% b with identical semantics. So in this context, adjacency behaves as a left-associative infix operator. Obviously, this doesn't apply in many languages, nor to all whitespace; the argument only holds when there is a meaningful semantic adjacency, but if it walks like a duck and quacks like a duck…

A similar argument can be made for other delimiters. For example, in OCaml there's little difference in the expression tree created for [1; 2; 3] and the explicit (1::2::3::[]) construction with CONS operators.

Fun side note: the Unicode symbols U+2061, U+2062, and U+2063 are zero-width symbols for Function Application, Invisible Times, and Invisible Separator (comma), respectively.

In this view, parentheses and other bracket-like constructions are matchfix grouping operators. They’re often hardcoded into the grammar with special delimiters, but there's no semantic difference because they result in the same AST. Saying they are “just syntax” doesn't change the duck.

As a final example, consider a C-like cast (mytype)x. This could be read as:

- a prefix operator on x, which is probably how you think of it;
- a unary matchfix operator on mytype that returns the appropriate prefix operator to apply to x, which is how the parser probably sees it; or
- a binary operator that applies a type-function like cast(mytype, x), which is probably what's in the AST.

When learning or teaching a specific language with a goal of building applications, all this is of little use, or even unhelpful. However, in a deep dive investigating operators and their problems across languages, well, as a favorite fictional detective often says, “In an investigation, ~~ducks~~ details matter.”

The problem is that operators can encode and implement language interactions in ways that functions cannot. As we'll see later in the discussion of short-circuiting and overloading, the only way to cleanly separate operator syntax from operator semantics is to downgrade them to have the same capabilities as functions—at which point, they can always be desugared into a call tree.

Alternatively, we could view functions and other constructs as a subset of and upgradable to operators. At which point, everything is an operator, and the definition becomes really unhelpful.

This motivates the frustratingly circular modern definition:

An operator is whatever the language implements as an operator—or charitably, anything in the syntactic class of operators.

However, from the more constructive view I've used here, we can frame operators a little differently—if we ignore macros and deliberately obfuscated constructs:

Operators are syntactically context-free, value-yielding, composable constructs that build structural expressions according to precedence and associativity rules.

In contrast, constructs like C’s for (;;){} or if (...) {} else {} are not operators. Their syntax is fixed and non-compositional—they don’t participate in expression composition or obey precedence rules. The AST structure they produce is dictated entirely by special-case grammar rules rather than compositional semantics, even if we can sometimes see operator-like structures when we squint real hard at the AST.

This definition is only slightly more helpful, but the key point is that this is a behavioral definition, grounded in compositional effect—not a circular syntactic classification based on how a particular grammar chooses to encode or tokenize the constructions. Whether something is hardcoded into a parser or built from desugared components shouldn't change its classification when the result is the same.

However, it is still an overly broad definition, because unfortunately, many things in a language really can be viewed from an operator perspective.

But also, I'm cheating: this definition comports almost exactly with operad theory—the formal mathematical framework for composable computation.

Conclusion

It's not really reasonable to try to tightly define what a modern programming language operator is. I do, however, think we can tightly define some subclasses and properly implement them without the need for something like Rust's excellent but heavy macro implementation. We can also name them with the help of a useful linguistic operator: the adjective.

In the next part, we'll simplify life by borrowing an idea from the ML languages—that it's okay for operators not to be type-overloadable—and build a general "algebraic operator" language front end. We'll then use that to build an algebra of Sets, and a subset of Geometric Algebra.

And in part 3, we'll try to disentangle the types, overloading, and evaluation control operator concepts. We'll also propose some structures and strictures for a more principled use of operators.